Interested practitioners, developers and researchers are hereby invited to present a paper at the fifth annual conference focused on the application of Semantic Technologies to Information Systems and the Web. The event will be held on June 14-18, 2009 at the Fairmont Hotel in San Jose, California.

The conference will comprise multiple educational sessions, including tutorials, technical topics, business topics, and case studies. We are particularly seeking presentations on currently implemented applications of semantic technology in both the enterprise and internet environments. A number of appropriate topic areas are identified below. Speakers are invited to offer additional topic areas related to the subject of Semantic Technology if they see fit.

The conference is designed to maximize cross-fertilization between those who are building semantically-based products and those who are implementing them. Therefore, we will consider research and/or academic treatments, vendor and/or analyst reports on the state of the commercial marketplace, and case study presentations from developers and corporate users. For some topics we will include introductory tutorials.

The conference is produced by Semantic Universe, a joint venture of Wilshire Conferences, Inc. and Semantic Arts, Inc.

Audience

The 2008 conference drew over 1000 attendees. We expect to increase that attendance in 2009. The attendees, most of whom were senior and mid-level managers, came from a wide range of industries and disciplines. About half were new to Semantics and we expect that ratio to be the same this year. When you respond, indicate whether your presentation is appropriate for those new to the field or only for experienced practitioners, and whether it is more technical or business-focused (we're looking for a mix).

Tracks (Topic Areas)

The conference program will include 60-minute, three-hour, and six-hour presentations on the following topics:

Business and Marketplace

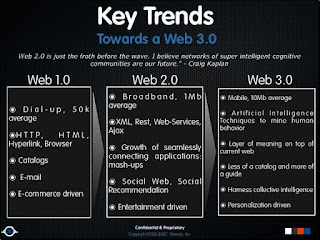

Industry trends, market outlook, business and investment opportunities.

Collaboration and Social Networks

Leveraging Web 2.0 in semantic systems. FOAF, Semantically-Interlinked Online Communities (SIOC), wikis, tagging, folksonomies, data portability.

Data Integration and Mashups

Web-scale data integration, semantic mashups, disparate data access, scalability, database requirements, Linked Data, data transformations, XML.

Developing Semantic Applications

Experience reports or prototypes of specific applications that demonstrate automated semantic inference. Frameworks, platforms, and tools used could include: Wikis, Jena, Redland, JADE, NetKernel, OWL API, RDF, GRDDL, Ruby on Rails, AJAX, JSON, Microformats, Process Specification Language (PSL), Atom, Yahoo! Pipes, Freebase, Powerset, and Twine.

Foundational Topics

This will include the basics of Semantic Technology for the beginner and/or business user, including knowledge representation, open world reasoning, logical theory, inference engines, formal semantics, ontologies, taxonomies, folksonomies, vocabularies, assertions, triples, description logic, and semantic models.

Knowledge Engineering and Management

Knowledge management concepts, knowledge acquisition, organization and use, building knowledge apps, artificial intelligence.

Ontologies and Ontology Concepts

Ontology definitions, reasoning, upper ontologies, formal ontologies, ontology standards, linking and reuse of ontologies, and ontology design principles.

Semantic Case Studies and Web 3.0

Reports on applications that use explicit semantic information to change their appearance or behavior, aka "dynamic apps". Web 3.0 applications. Consumer apps, business apps, research apps.

Semantic Integration

Includes semantic enhancement of Web services, standards such as OWL-S, WSDL-S, WSMO and USDL, and semantic brokers.

Semantic Query

Advances in semantically-based federated query, query languages such as SWRL, SPARQL, query performance, faceted query, triple stores, scalability issues.

Semantic Rules

Business rules, logic programming, production rules, Prolog-like systems, use of Horn rules, inference rules, RuleML, Semantics of Business Vocabulary and Business Rules (SBVR).

Semantic Search

Different approaches to semantic search in the enterprise and on the web, successful application examples, tools (such as Sesame), performance and relevance/accuracy measures, natural language search, faceted search, visualization.

Semantic SOA (Service Oriented Architectures)

Semantic requirements within SOA, message models and design, canonical model development, defining service contracts, shared business services, discovery processes.

Semantic Web

OWL/RDF and Semantic Web rule and query languages such as SWRL, SPARQL and the like. Includes linked data, as well as progress on policy and trust.

Semantics for Enterprise Information Management (EIM)

Where and how semantic technology can be used in Enterprise Information Management. Applications such as governance, data quality, decision automation, reporting, publishing, search, enterprise ontologies.

Business Ontologies

Design and deployment methods, best practices, industry-specific ontologies, case studies, ontology-based application development, ontology design tools, ontology-based integration.

Taxonomies

Design and development approaches, tools, underlying disciplines for practitioners, vocabularies, taxonomy representation, taxonomy integration, relationship to ontologies.

Unstructured Information

This will include entity extraction, Natural Language Processing, social tagging, content aggregation, knowledge extraction, metadata acquisition, text analytics, content and document management, multi-language processing, GRDDL.

Other

You are welcome to suggest other topic areas.

Key Dates & Speaker Deadlines

All proposals must be submitted via the online Call for Papers process.

| Milestone | Date |
| --- | --- |
| Proposal submissions due | November 24, 2008 |
| Speakers notified of selection | December 16, 2008 |
| Speaker PowerPoint files due | May 18, 2009 |

It is regrettable indeed. I was deeply saddened and somewhat enraged by the Wall Street Journal's closing of its library. In our information age, which depends so much on knowledge workers, Wall Street has decided that it can cut back by taking away a vital part of the gathering, organizing, and dissemination of up-to-the-minute information. Can news reporters be expected to do all the work themselves? Can they properly search for relevant and pertinent information? Is that even their job?


Archival programs


World Cafés
